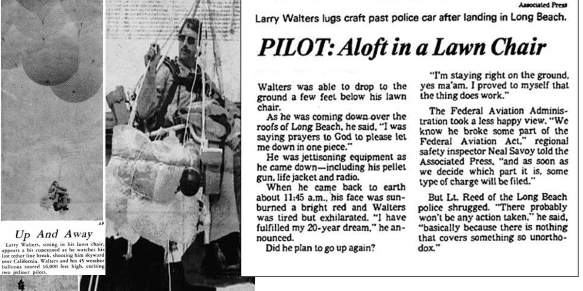

Larry Walters always wanted to fly. When he was old enough, he joined the Air Force, but he could not see well enough to become a pilot. After he was discharged from the military, he would often sit in his backyard watching jets fly overhead, dreaming about flying and scheming about how to get into the sky. On July 2, 1982, the San Pedro, California trucker finally set out to accomplish his dream. Because the story has been told in a variety of ways over a variety of media outlets, it is impossible to know precisely what happened but, as a police officer commented later, “It wasn’t a highly scientific expedition.”

Larry conceived his “act of American ingenuity” while sitting outside in his “extremely comfortable” Sears lawn chair. He purchased weather balloons from an Army-Navy surplus store, tied them to his tethered Sears chair and filled the four-foot diameter balloons with helium. Then, after packing sandwiches, Miller Lite, a CB radio, a camera, a pellet gun, and 30 one-pound jugs of water for ballast – but without a seatbelt – he climbed into his makeshift craft, dubbed “Inspiration I.” His plan, such as it was, called for him to float lazily above the rooftops at about 30 feet for a while, pounding beers, and then to use the pellet gun to explode the balloons one-by-one so he could float to the ground.

But when the last cord that tethered the craft to his Jeep snapped, Walters and his lawn chair did not rise lazily into the sky. Larry shot up to an altitude of about three miles (higher than a Cessna can go), yanked by the lift of 45 helium balloons holding 33 cubic feet of helium each. He did not dare shoot any of the balloons because he feared that he might unbalance the load and fall. So he slowly drifted along, cold and frightened, in his lawn chair, with his beer and sandwiches, for more than 14 hours. He eventually crossed the primary approach corridor of LAX. A flustered TWA pilot spotted Larry and radioed the tower that he was passing a guy in a lawn chair with a gun at 16,000 feet.

Eventually Larry conjured up the nerve to shoot several balloons before accidentally dropping his pellet gun overboard. The shooting did the trick and Larry descended toward Long Beach, until the dangling tethers got caught in a power line, causing an electrical blackout in the neighborhood below. Fortunately, Walters was able to climb to the ground safely from there.

The Long Beach Police Department and federal authorities were waiting. Regional safety inspector Neal Savoy said, “We know he broke some part of the Federal Aviation Act, and as soon as we decide which part it is, some type of charge will be filed. If he had a pilot’s license, we’d suspend that. But he doesn’t.” As he was led away in handcuffs, a reporter asked Larry why he had undertaken his mission. The answer was simple and poignant. “A man can’t just sit around,” he said.

The Inversion Principle

In one of the more glaringly obvious observations of all time, it is safe to say that Larry’s decision-making process was more than a bit flawed. The Bonehead Club of Dallas awarded him its top prize – Bonehead of the Year – but he only earned an honorable mention from the Darwin Awards people, presumably because, even though things did not turn out exactly as he planned (another glaringly obvious observation), he was incredibly lucky and his flight did not end in disaster. Among his many errors, Larry did not follow the inversion principle popularized in the investment world by Charlie Munger. Charlie borrowed this highly useful idea from the great 19th-century German mathematician Carl Jacobi, who created this helpful approach for improving your decision-making process.

Invert, always invert (“man muss immer umkehren”).

Jacobi believed that the solution for many difficult problems could be found if the problems were expressed in the inverse – by working or thinking backwards. As Munger has explained, “Invert. Always invert. Turn a situation or problem upside down. Look at it backward. What happens if all our plans go wrong? Where don’t we want to go, and how do you get there? Instead of looking for success, make a list of how to fail instead – through sloth, envy, resentment, self-pity, entitlement, all the mental habits of self-defeat. Avoid these qualities and you will succeed. Tell me where I’m going to die, that is, so I don’t go there.” Charlie’s partner, Warren Buffett, makes a similar point: “Charlie and I have not learned how to solve difficult business problems. What we have learned is to avoid them.”

As in most matters, we would do well to emulate Charlie. But what does that mean?

It begins with working backwards, to the extent you can, quite literally. If you have done algebra, you know that reversing an equation is the best way to check your work. Similarly, the best way to proofread is back-to-front, one painstaking sentence at a time. But it also means much more than that.

Thinking in Reverse

Charlie’s inversion principle also means thinking in reverse. As Munger explains it: “In other words, if you want to help India, the question you should ask is not, ‘How can I help India?’ It’s, ‘What is doing the worst damage in India?’”

During World War II, the Allied forces sent regular bombing missions into Germany. The lumbering aircraft sent on these raids – most often B-17s – were strategically crucial to the war effort and were often lost to enemy anti-aircraft fire. That was a huge problem, obviously.

One possible solution was to provide more reinforcement for the Flying Fortresses, but armor is heavy and restricts aircraft performance even more. So extra plating could only go where the planes were most vulnerable. The problem of where to add armor was a difficult one because the data set was so limited. There was no access to the planes that had been shot down. In 1943, the English Air Ministry examined the locations of the bullet holes on the returned aircraft and proposed adding armor to those areas that showed the most damage, all at the planes’ extremities.

The great mathematician Abraham Wald, who had fled Austria for the United States in 1938 to escape the Nazis, was put to work on the problem of estimating the survival probabilities of planes sustaining hits in various locations so that the added armor would be located most expeditiously. Wald came to a surprising and very different conclusion from that proposed by the Air Ministry. Since much of Wald’s analysis at the time was new – he did not have sufficient computing power to model results and did not have access to more recent statistical approaches – his work was ad hoc and his success was due to “the sheer power of his intuition” alone.

Wald began by drawing an outline of a plane and marking it where returning planes had been hit. There were lots of shots everywhere except in a few particular (and crucial) areas, with more shots to the planes’ extremities than anywhere else. By inverting the problem – considering where the planes that didn’t return had been hit and what it would take to disable an aircraft rather than examining the data he had from the returning bombers – Wald came to his unique insight, later confirmed by mathematical analysis that was remarkable for the time and long classified. Much like Sherlock Holmes and the dog that didn’t bark, Wald’s remarkable intuitive leap came about due to what he didn’t see. That Wald’s insight seems obvious now is a wonderful illustration of hindsight bias.

Wald realized that the holes from flak and bullets most often seen on the bombers that returned represented the areas where planes were best able to absorb damage and survive. Since the data showed that there were similar areas on each returning B-17 showing little or no damage from enemy fire, Wald concluded that those areas (around the main cockpit and the fuel tanks) were the truly vulnerable spots and that these were the areas that should be reinforced.

From a mathematical perspective, Wald considered what might have happened to account for the data he possessed. He set the probability that a plane hit in the engine managed to stay in the air to zero and thought about what that would mean. In other words, conceptually, he assumed that any hit to the engine would bring the plane down. Planes returned from their missions with bullet holes everywhere but the engine, and the only alternative explanation was that planes were never hit in the engine at all. Thus, either the German gunfire hit every part of the plane but one, or the engine was a point of extreme vulnerability. Wald considered both possibilities, but the latter made much more sense.
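Wald’s reasoning can be illustrated with a toy simulation. This is only a sketch: the four sections, the per-hit loss probabilities, and the three hits per plane are invented for illustration and are not Wald’s data or his actual method. Hits land uniformly across the plane, yet the holes counted on returning planes under-represent the most vulnerable sections.

```python
import random

# Hypothetical sections and per-hit probabilities of losing the plane.
# These numbers are illustrative assumptions, not historical data.
LOSS_PROB = {"engine": 0.6, "cockpit": 0.4, "fuselage": 0.05, "wings": 0.05}


def simulate(n_planes=20000, hits_per_plane=3, seed=0):
    """Count hits per section on all planes and on returning planes only."""
    rng = random.Random(seed)
    sections = list(LOSS_PROB)
    all_hits = dict.fromkeys(sections, 0)
    survivor_hits = dict.fromkeys(sections, 0)
    for _ in range(n_planes):
        # Enemy fire is assumed to land uniformly at random across sections.
        hits = [rng.choice(sections) for _ in range(hits_per_plane)]
        for s in hits:
            all_hits[s] += 1
        # The plane returns only if it survives every single hit.
        if all(rng.random() > LOSS_PROB[s] for s in hits):
            for s in hits:
                survivor_hits[s] += 1
    return all_hits, survivor_hits


def shares(counts):
    """Convert raw hit counts into each section's share of the total."""
    total = sum(counts.values())
    return {s: c / total for s, c in counts.items()}


all_hits, survivor_hits = simulate()
all_share = shares(all_hits)
seen_share = shares(survivor_hits)
for s in LOSS_PROB:
    print(f"{s:9s} all planes: {all_share[s]:.2f}   returning planes: {seen_share[s]:.2f}")
```

An analyst who sees only the second column would conclude that the engine is rarely hit, when in this toy setup it is hit as often as anything else and simply brings the plane down more often.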

The more useful data was in the planes that were shot down and unavailable, not the ones that survived, and had to be “gathered” by Wald via induction. This insight lies behind the related concepts we now call survivorship bias – our tendency to include only successes in statistical analysis, skewing or even invalidating the results – and selection bias – the distortions we see when the sample selection does not accurately reflect the target population. Thus, the fish you observe in a pond will almost certainly correspond to the size of the holes in your net. Inverting the problem allowed Wald to come to the correct conclusion, saving many planes (and lives).

This idea applies to baseball too. As I have argued before, the crucial insight of Moneyball was a “Mungeresque” inversion. In baseball, a team wins by scoring more runs than its opponent. The epiphany was to invert the idea that runs and wins were achieved by hits to the radical notion that the key to winning is avoiding outs. That led the story’s protagonist, general manager of the Oakland A’s Billy Beane, to “buy” on-base percentage cheaply because the “traditional baseball men” overvalued hits but undervalued on-base percentage even though it does not matter how a batter avoids making an out and reaches base.

Therefore, the key application of the Moneyball insight was for Beane to find value via underappreciated player assets (some assets are cheap for good reason) by way of an objective, disciplined, data-driven process that values OBP more than conventional baseball wisdom. In other words, as Michael Lewis explained, “it is about using statistical analysis to shift the odds [of winning] a bit in one’s favor” via market inefficiencies. As A’s Assistant GM Paul DePodesta said, “You have to understand that for someone to become an Oakland A, he has to have something wrong with him. Because if he doesn’t have something wrong with him, he gets valued properly by the marketplace, and we can’t afford him anymore.” Accordingly, Beane sought out players that he could obtain cheaply because their actual (statistically verifiable) value was greater than their generally perceived value.

The great Howard Marks has also applied this idea to the investing world:

“If what’s obvious and what everyone knows is usually wrong, then what’s right? The answer comes from inverting the concept of obvious appeal. The truth is, the best buys are usually found in the things most people don’t understand or believe in. These might be securities, investment approaches or investing concepts, but the fact that something isn’t widely accepted usually serves as a green light to those who’re perceptive (and contrary) enough to see it.”

The key investment application of the inversion principle, therefore, is that in most cases we would be better served by looking closely at the examples of people and portfolios that failed and why they failed instead of the success stories, even though such examples are unlikely to give rise to book contracts with six-figure advances. Similarly, we would be better served by examining our personal investment failures than our successes. Instead of focusing on “why we made it,” we would be better served by careful failure analysis and fault diagnosis. That is where the best data is and where the best insight may be inferred.

The smartest people always question their assumptions to make sure that they are justified. The data set that was available to Wald was not a good sample. By inverting his thinking, Wald could more readily hypothesize and conclude that the sample was lacking.

Don’t Be Stupid

The inversion principle also means taking a step back (so to speak) to consider your goals in reverse. Our first goal, therefore, should not be to achieve success, even though that is highly intuitive. Note, for example, this recent list of 2017’s smartest companies, which focuses on “breakthrough technologies” and “successful” innovations. Instead, our first goal should be to avoid failure – to limit mistakes. Instead of trying so hard to be smart, we should invert that and spend more energy on not being stupid, in large measure because not being stupid is far more achievable and manageable than being brilliant. In general, we would be better off pulling the bad stuff out of our ideas and processes than trying to put more good stuff in.

As Munger has stated, “I think part of the popularity of Berkshire Hathaway is that we look like people who have found a trick. It’s not brilliance. It’s just avoiding stupidity.” Here is a variation: “we know the edge of our competency better than most. That’s a very worthwhile thing.” Buffett has a variation on this theme too: “Rule No. 1: Never lose money. Rule No. 2: Never forget rule No. 1.” Another is to be fearful when others are greedy and greedy when others are fearful. George Costanza has his own unique iteration (“If every instinct you have is wrong, then the opposite would have to be right”).

If we avoid mistakes we will generally win. By examining failure more closely, we will have a better chance of doing precisely that. Basically, negative logic works better than positive logic. What we know not to be true is much more robust than what we know to be true. Note how Michelangelo thought about his master creation, the David. He always believed that David was within the marble he started with. He merely (which is not to say that it was anything like easy) had to chip away that which was not David. “In every block of marble I see a statue as plain as though it stood before me, shaped and perfect in attitude and action. I have only to hew away the rough walls that imprison the lovely apparition to reveal it to the other eyes as mine see it.” By chipping away at what “did not work,” Michelangelo uncovered a masterpiece. There are not a lot of masterpieces in life, but by avoiding failure, we give ourselves the best chance of overall success.

As Charley Ellis famously established, investing is a loser’s game much of the time (as I have also noted before) – with outcomes dominated by luck rather than skill, and with high transaction costs. Charley drew on the work of Simon Ramo, a scientist and statistician, whose Extraordinary Tennis for the Ordinary Player showed that professional tennis players and weekend tennis players play a fundamentally different game. The expert player, playing another expert player, needs to win points affirmatively through good shot-making to succeed. The weekend player wins by not losing – keeping the ball in play until his or her opponent makes an error, because weaker players make many more errors.

“In expert tennis, about 80 per cent of the points are won; in amateur tennis, about 80 per cent of the points are lost. In other words, professional tennis is a Winner’s Game – the final outcome is determined by the activities of the winner – and amateur tennis is a Loser’s Game – the final outcome is determined by the activities of the loser. The two games are, in their fundamental characteristic, not at all the same. They are opposites.”

As Charlie wrote in a letter to Wesco Shareholders while he was chair of the company: “Wesco continues to try more to profit from always remembering the obvious than from grasping the esoteric. … It is remarkable how much long-term advantage people like us have gotten by trying to be consistently not stupid, instead of trying to be very intelligent. There must be some wisdom in the folk saying, `It’s the strong swimmers who drown.’”

Moreover, it turns out that we can quantify this idea more precisely.

As Phil Birnbaum brilliantly suggested in Slate, not being stupid matters demonstrably more than being smart when a combination of luck and skill determines success. Suppose you are the GM of a baseball team and you are preparing for the annual draft. Avoiding a mistake helps more than being smart.

Suppose you have the 15th pick in the draft. You look at a player the Major League consensus says is the 20th best player and think he is better than that – perhaps the 10th best player. By contrast, the MLB consensus on another player is that he is the 15th best player but you think he is only the 30th best. What are the rewards and consequences if you are right about each player when the draft comes?

If the underrated player is available when your pick comes, you can snap him up for an effective gain of five spots. You get the 10th best player with the 15th pick. That is great. Of course, since everybody else is scouting too, you may not be the only one who recognizes the underrated player’s true value. Anybody with a pick ahead of you can steal your thunder. If that happens, your being smart did not help a bit.

If the overrated player is available when your turn comes up (in theory, he should be because he is the consensus 15th pick and you are picking 15th), you will pass on him, because you know he is not that good. If you had not done the scouting and done it right, you would have taken him with your 15th pick and suffered an effective loss of 15 spots by getting the 30th best player with the 15th pick. In that case, then, avoiding a mistake helped.

Moreover, and crucially, it does not matter if other teams scouted him correctly. You have dodged a bullet no matter what. Recognizing the undervalued player (being smart) only helps when you are alone in your recognition. Recognizing the overrated player (avoiding a mistake) always helps. Birnbaum’s moral: “You gain more by not being stupid than you do by being smart. Smart gets neutralized by other smart people. Stupid does not.” Thus, the importance of the error quotient becomes obvious (obviously, the lower the better).
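The asymmetry in the draft example can be put in rough numbers. The sketch below uses the ranks from the text; the function names and the probability `p_available` (the chance that no team picking ahead of you takes the underrated player) are hypothetical additions for illustration.

```python
def draft_value(pick, true_rank):
    """Spots gained (positive) or lost (negative) by spending `pick` on a player of `true_rank`."""
    return pick - true_rank


PICK = 15

# Being smart: consensus says 20th best, you correctly rate him 10th best.
smart_gain_if_available = draft_value(PICK, 10)  # gain of 5 spots

# Avoiding a mistake: consensus says 15th best, you correctly rate him 30th best.
# Passing on him avoids the loss you would otherwise have taken.
loss_avoided = -draft_value(PICK, 30)  # 15 spots of loss dodged


def expected_smart_gain(p_available):
    """Expected gain from being smart; it only pays off if the player is still on the board.

    `p_available` is a hypothetical parameter, not something the article estimates.
    """
    return p_available * smart_gain_if_available


print("gain from being smart, if he is still available:", smart_gain_if_available)
print("loss avoided by not being stupid (always):", loss_avoided)
print("expected smart gain at p_available=0.5:", expected_smart_gain(0.5))
```

Whatever value `p_available` takes, the gain from being smart shrinks as other teams get smarter, while the avoided loss does not depend on anyone else.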

The same principle can also be demonstrated mathematically, as Birnbaum also noted. Gather ten people and show them a jar that contains equal numbers of $1, $5, $20, and $100 bills. Pull one out, at random, so nobody can see, and auction it off. If the bidders are generally smart, the bidding should top out at just below $31.50 (how much less will depend on the extent of the group’s loss aversion), the value of the average bill: (1 + 5 + 20 + 100) ÷ 4. If you repeat the process but this time let two prospective bidders see the bill you picked, what happens? If you picked a $100 bill, the insiders should be willing to pay up to $99.99 for the bill. Neither of them will benefit much from the insider knowledge. However, if it is a $1 bill, neither of the insiders will bid. Without that knowledge, and assuming each of the ten bidders is equally likely to win, each of the insiders would have had a one-in-10 chance of paying $31.50 for the $1 bill and suffering a loss of $30.50. On an expected value basis, each gained $3.05 (one-tenth of $30.50) from being an insider in that case. Avoiding errors matters more than being smart.
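The arithmetic behind those figures can be checked in a few lines. The sketch assumes, as the example does, that the uninformed winner pays the full $31.50 and that each of the ten bidders is equally likely to win; the $3.05 figure is conditional on the $1 bill having been drawn. The variable names are illustrative.

```python
from fractions import Fraction

BILLS = [1, 5, 20, 100]   # equal numbers of each denomination in the jar
N_BIDDERS = 10

# Fair value of a randomly drawn bill: the plain average of the denominations.
fair_value = Fraction(sum(BILLS), len(BILLS))

# Loss suffered by an uninformed winner who pays fair value for a $1 bill.
loss_on_one = fair_value - 1

# Each bidder is assumed equally likely to win, so the expected loss an
# insider avoids (given that the $1 bill was drawn) is one-tenth of that loss.
p_win = Fraction(1, N_BIDDERS)
expected_loss_avoided = p_win * loss_on_one

print(f"fair value:            ${float(fair_value):.2f}")
print(f"loss on the $1 bill:   ${float(loss_on_one):.2f}")
print(f"expected loss avoided: ${float(expected_loss_avoided):.2f}")
```

Exact fractions are used instead of floats so that the results match the dollar figures in the text without rounding noise.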

That investing successfully is really hard suggests to most of us that being really smart should be a big plus in investing. Yet while it can help, the existence of other smart people together with copycats and hangers-on greatly dilutes the value of being market-smart. On the other hand, the impact of bad decision-making stands alone. It is not lessened by the related stupidity of others. In fact, the more people act stupidly together, the greater the aggregate risk and the greater the potential for loss. This risk grows exponentially. Think of everyone piling on during the tech or real estate bubbles. When nearly all of us make the same kinds of poor decisions together – when the error quotient is especially high – the danger becomes enormous.

Science

Science is perhaps the quintessential inversion. It is the most powerful tool there is for determining what is real and what is true, and yet it advances only by ascertaining what is false. In other words, it works due to disconfirmation rather than confirmation. As Munger observed about Charles Darwin: “Darwin’s result was due in large measure to his working method, which violated all my rules for misery and particularly emphasized a backward twist in that he always gave priority attention to evidence tending to disconfirm whatever cherished and hard-won theory he already had. In contrast, most people early achieve and later intensify a tendency to process new and disconfirming information so that any original conclusion remains intact. They become people of whom Philip Wylie observed: ‘You couldn’t squeeze a dime between what they already know and what they will never learn.’”

The Oxford English Dictionary defines the scientific method as “a method or procedure that has characterized natural science since the 17th century, consisting in systematic observation, measurement and experiment, and the formulation, testing, and modification of hypotheses.” Science is about making observations and then asking pertinent questions about those observations. What it means is that we observe and investigate the world and build our knowledge base on account of what we learn and discover, but we check our work at every point and keep checking our work. It is inherently experimental. In order to be scientific, then, our inquiries and conclusions must be based upon empirical, measurable evidence. We will never just “know.”

The scientific method, broadly construed, can and should be applied not only to traditional scientific endeavors, but also, to the fullest extent possible, to any sort of inquiry into or study about the nature of reality, including investing. As I have noted before, the great physicist and Nobel laureate Richard Feynman even applied such experimentation to hitting on women. To his surprise, he learned that he (at least) was more successful by being aloof than by being polite or by buying a woman he found attractive a drink.

David Wootton’s brilliant book, The Invention of Science, makes a compelling case that modernity began with the scientific revolution in Europe, book-ended by Danish astronomer Tycho Brahe’s identification of a new star in the heavens in 1572, which proved that the heavens were not fixed, and the publication of Isaac Newton’s Opticks in 1704, which drew conclusions based upon experimentation. In Wootton’s view, this was “the most important transformation in human history” since the Neolithic era and in no small measure predicated upon a scientific mindset, which includes the unprejudiced observation of nature, careful data collection, and rigorous experimentation. In his view, the “scientific way of thinking has become so much part of our culture that it has now become difficult to think our way back into a world where people did not speak of facts, hypotheses and theories, where knowledge was not grounded in evidence, where nature did not have laws.” I think Wootton’s claim is surely true, even if honored mainly in the breach.

The scientific approach was truly a new way of thinking (despite historical antecedents). Wootton shows that when Christopher Columbus came to the New World in 1492, he did not have a word to describe what he had done (or at least appeared to have done, with apologies to the Vikings). It was the Portuguese, the first global imperial power, who introduced the term “discovery” in the early 16th Century. There were other new words and concepts that were also important when trying to understand the scientific revolution, such as “fact” (only widely used after 1663), “evidence” (incorporated into science from the legal system) and “experiment.”

As Wootton explains, knowledge, as it was espoused in medieval universities and monasteries, was dominated by the ancients, the likes of Ptolemy, Galen, and Aristotle. Accordingly, it was widely believed that all of the most important knowledge was already known. Thus, learning was predominantly a backward-facing pursuit, about returning to ancient first principles, not pushing into the unknown. Indeed, Wootton details the emergence of fact and evidence as previously unknown terms of art. The modern scientific pursuit is the “formation of a critical community capable of assessing discoveries and replicating results.”

In its broadest context, science is the careful, systematic and logical search for knowledge, obtained by examination of the best available evidence and always subject to correction and improvement upon the discovery of better or additional evidence. That is the essence of what has come to be known as the scientific method, which is the process by which we, collectively and over time, endeavor to construct an accurate (that is, reliable, consistent and non-arbitrary) representation of the world. Otherwise (per James Randi), we are doing magic, and magic simply does not work.

Aristotle, brilliant and important as he was, posited, for example, that heavy objects fall faster than lighter objects and that males and females have different numbers of teeth, based upon some careful – though flawed – reasoning. But it never seemed to have occurred to him that he ought to check. Checking and then re-checking your ideas or work offers evidence that may tend to confirm or disprove them. By collecting “a long-term data set,” per field biologist George Schaller, “you find out what actually happens.” Testing can also be reproduced by any skeptic, which means that you need not simply trust the proponent of any idea. You do not need to take anyone’s word for things — you can check it out for yourself. That is the essence of the scientific endeavor.

Science is inherently limiting, however. We want deductive proof in the manner of Aristotle, but have to settle for induction. That is because science can never fully prove anything. It analyzes the available data and, when the force of the data is strong enough, it makes tentative conclusions. Moreover, these conclusions are always subject to modification or even outright rejection based upon further evidence gathering. The great value of facts and data is not so much that they point toward the correct conclusion (even though they do), but that they allow us the ability to show that some things are conclusively wrong.

Science progresses not via verification (which can only be inferred) but by falsification (which, if established and itself verified, provides relative certainty only as to what is not true). That makes it unwieldy. Thank you, Karl Popper. In investing, as in science generally, we need to build our processes from the ground up, with hypotheses offered only after a careful analysis of all relevant facts and tentatively held only to the extent the facts and data allow.

In investing, much like science generally and as in life, if we avoid mistakes we will generally win. We all want to be Michael Burry, an investor who made a fortune because he recognized the mortgage bubble in time to act accordingly. However, becoming Michael Burry starts by not being Wing Chau, an investor of Lawn Chair Larry foolishness who got crushed when the mortgage market collapsed. In fact, we all suffered when the real estate bubble burst. When the error quotient is especially high, our risks grow exponentially. Success starts with avoiding errors and looking at problems and situations differently.

Invert. Always invert.

This is the obvious flaw in the argument. What is the worst thing that could happen to people? For everyone to do nothing and just watch as humanity collapses in a month.

How is that a flaw? Inverted thinking, in this case, merely suggests that people should continue to do things to help maintain human society.

I do not understand the example of the money in the jar.

When it comes to the insiders and their knowledge, I accept that they would bid up to $99.99 if they knew that the note being auctioned off was the $100 one. In other words, the winner of the bid would be $0.01 better off. That is fine! However, when they know that the note being auctioned off is only $1, why do you state that neither would bid? Surely, they should bid to $0.99, because if they did win the bid, they would make the same $0.01 profit!

Could somebody explain also how the example can claim that WITHOUT the knowledge of what the note being auctioned is, there is a one in ten (i.e. 10%) chance of each of them bidding $31.50 for that $1.00 note? Let us assume that they always bid $31.50 (since that is the expected value of the note being auctioned in the long run), then if there are only four types of notes available ($1, $5, $20 and $100), then the odds of either getting the $1 is 25% i.e. one in four. However, given that there are ten bidders, then perhaps this should be adjusted because they will only win the auction once in every ten times i.e. 10%, and so 10% of 25% is only 2.5%, so I am baffled where the one in ten comes from.

Lastly, could somebody explain where the value of the secret knowledge comes from to arrive at $3.05?

Thanks.

John

In the case where the bill picked is $1, each of the 10 bidders has a 1/10 chance of winning (assuming the winner is chosen at random). That is, in the $1 case each has an expected return of $(1 − 31.50)/10 = −$3.05.

I guess the point was that being an insider only helped avoid the −$3.05 expected loss, not with the gain from the big bill.
